Async/await inference in Firefly: Part 3

In the last post, we saw how to implement colorless async/await via effect inference. In this post, we will extend the solution to solve a common problem in web apps, where requests continue on even though the response will never be used. It's like a memory leak, but one that also uses CPU and server resources. If you missed the last post, here it is:

To see how the leak arises, consider the following JavaScript, which starts two concurrent requests and then waits for their results:

    let promise1 = fetch("https://example.com/one")
    let promise2 = fetch("https://example.com/two")
    return await Promise.all([promise1, promise2])

Pretty straightforward code. However, it's code that leaks resources: if one of the requests fails, the returned promise rejects. Meanwhile, the other request, which is now unreachable, will continue expending server resources until it's ready to deliver the result. Only, nobody is waiting for that result. Everybody's gone home.
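The leak can be observed without a network. The following is a minimal sketch in which `fakeRequest` and `demonstrateLeak` are hypothetical stand-ins (they are not part of any library): timers play the role of requests, and a log records what happens after the caller has already given up.

```javascript
// Hypothetical stand-in for a network request: settles after `ms` milliseconds.
function fakeRequest(name, ms, fail, log) {
    return new Promise((resolve, reject) => {
        setTimeout(() => {
            log.push(name + (fail ? " failed" : " finished"))
            if(fail) reject(new Error(name + " failed"))
            else resolve(name)
        }, ms)
    })
}

async function demonstrateLeak() {
    let log = []
    let promise1 = fakeRequest("one", 10, true, log)
    let promise2 = fakeRequest("two", 50, false, log)
    try {
        await Promise.all([promise1, promise2])
    } catch(e) {
        // Promise.all rejects as soon as the first request fails.
        log.push("caller gave up")
    }
    // Keep watching for a while: nothing has told the second request to stop.
    await new Promise(resolve => setTimeout(resolve, 100))
    return log
}
```

In this sketch the second request still runs to completion after the caller has moved on, which is the wasted work described above.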

Modern JavaScript has a solution to this problem: The AbortSignal. It works by creating an AbortController, which has an associated AbortSignal. The fetch method accepts such a signal, allowing us to cancel the request. Let's fix our JavaScript code:

    let controller = new AbortController()
    try {
        let promise1 = fetch("https://example.com/one", {signal: controller.signal})
        let promise2 = fetch("https://example.com/two", {signal: controller.signal})
        return await Promise.all([promise1, promise2])
    } catch(e) {
        controller.abort(e)
        throw e
    }

The code no longer leaks when one of the requests fails. However, the elegance of the original code has been lost. Unfortunately, that's not the end of it.

As soon as we want to run the code above concurrently with some other requests that may fail, we'll hit a dead end: The code does not take in an AbortSignal, it just creates its own. That means we can't abort it when we find out it's no longer needed.

To fix that, it must take in an AbortSignal of its own. It still needs to create a separate AbortController, because we don't necessarily want a single request failure to abort the whole program. That means more bookkeeping: We need to propagate the abort from the outer signal to the inner signal, and this is done via event listeners. It's important to remember to remove that event listener again, or it's another leak. Before we do anything, we need to check if the outer signal has already been aborted, and throw if that's the case. Here it is:

    async function fetchTwo(url1, url2, signal) {
        // Bail out early if the caller has already given up.
        if(signal.aborted) throw signal.reason
        let controller = new AbortController()
        // Propagate an abort of the outer signal to the inner controller.
        let abort = () => controller.abort(signal.reason)
        signal.addEventListener('abort', abort)
        try {
            let promise1 = fetch(url1, {signal: controller.signal})
            let promise2 = fetch(url2, {signal: controller.signal})
            return await Promise.all([promise1, promise2])
        } catch(e) {
            // One request failed (or we were aborted): cancel the other one.
            controller.abort(e)
            throw e
        } finally {
            // Remove the listener again, or it's another leak.
            signal.removeEventListener('abort', abort)
        }
    }

Sigh. Our original 3-liner is now completely lost to bookkeeping.
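The propagation step is the part that is easiest to get wrong, so here it is in isolation. This is only a sketch: `linkToOuter` is a hypothetical helper name, not a standard API, and it relies only on `AbortController`, `AbortSignal` and their event listeners.

```javascript
// Hypothetical helper: creates an inner controller that aborts whenever `outer` does.
function linkToOuter(outer) {
    let controller = new AbortController()
    let abort = () => controller.abort(outer.reason)
    if(outer.aborted) abort()
    else outer.addEventListener('abort', abort)
    return {
        controller,
        // Call this when the inner work is done, so the listener doesn't linger.
        unlink: () => outer.removeEventListener('abort', abort)
    }
}

let outer = new AbortController()
let first = linkToOuter(outer.signal)
let second = linkToOuter(outer.signal)

// Aborting an inner controller affects neither the outer signal nor its siblings.
first.controller.abort(new Error("request failed"))

// After unlinking, an outer abort no longer reaches the inner controller.
second.unlink()

// A linked controller that is still listening follows the outer abort.
let third = linkToOuter(outer.signal)
outer.abort(new Error("page closed"))
```

Aborts flow from the outer signal to the inner one and never the other way around, which is why a single failed request doesn't take the whole program down with it.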

The solution

Fortunately, this problem has already been solved with a pattern known as structured concurrency. Structured concurrency is when subtasks are guaranteed to resolve before their parent task resolves. Thus, they won't be wasting resources when the parent task has long been rejected.

The first step towards structured concurrency is to capture the above pattern as a library function. Let's augment the concurrently function from the previous post, so that it accepts a signal:

async function concurrently(taskSystem, task1, task2, signal) {

if(signal.aborted) throw signal.reason

let controller = new AbortController()

let abort = () => controller.abort(signal.reason)

signal.addEventListener('abort', abort)

try {

let promise1 = task1(controller.signal)

let promise2 = task2(controller.signal)

let array = await Promise.all([promise1, promise2])

return {first: array[0], second: array[1]}

} catch(e) {

controller.abort(e)

throw e

} finally {

signal.removeEventListener('abort', abort)

}

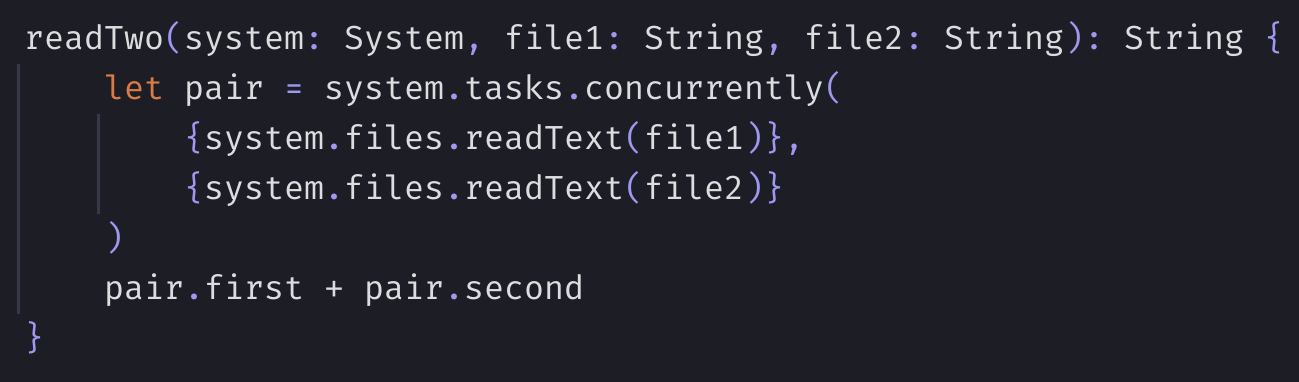

}But where does signal come from? Consider the Firefly code example from the last post:

readTwo(system: System, file1: String, file2: String): String {

let pair = system.tasks.concurrently(

{system.files.readText(file1)},

{system.files.readText(file2)}

)

pair.first + pair.second

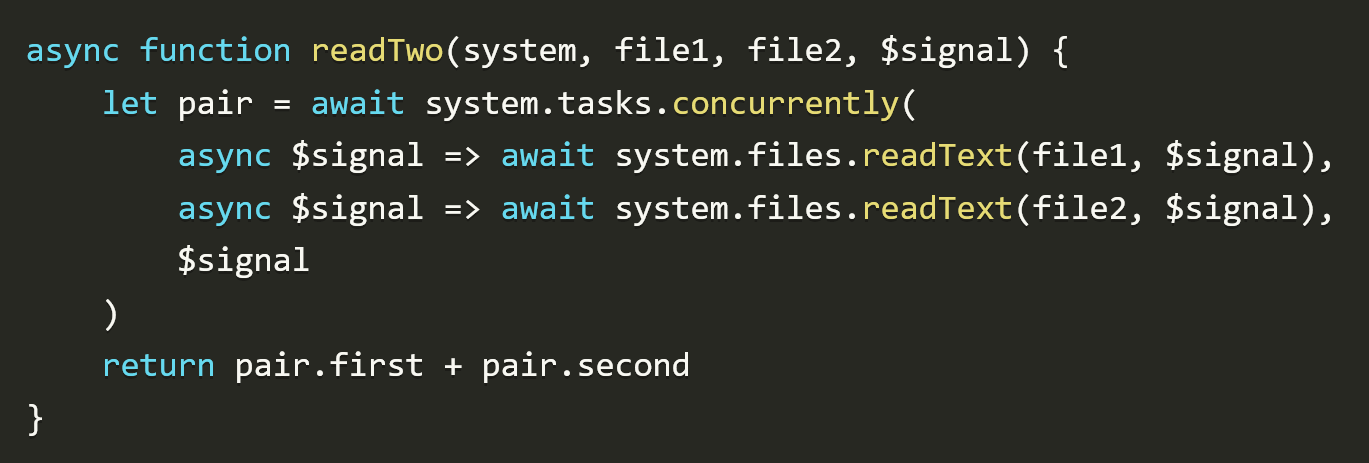

}Last time, we found out where to add async and await to convert this Firefly code to asynchronous JavaScript. In order to pass the signal around, we'll alter the code emitter slightly, so that it adds a $signal argument to every async function, and then pass that as an extra argument to every call we await:

    async function readTwo(system, file1, file2, $signal) {
        let pair = await system.tasks.concurrently(
            async $signal => await system.files.readText(file1, $signal),
            async $signal => await system.files.readText(file2, $signal),
            $signal
        )
        return pair.first + pair.second
    }

With this change to the standard library and code generation, we won't have to change our code from last time at all – it's already leak free.
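To check that claim without a real file system, here is a rough sketch that exercises the `concurrently` function from this post with timer-based tasks. `sleepTask`, `failingTask` and `demo` are hypothetical names invented for the illustration, and `null` is passed for `taskSystem` only because the function body never uses it.

```javascript
// The concurrently function as it appears in this post.
async function concurrently(taskSystem, task1, task2, signal) {
    if(signal.aborted) throw signal.reason
    let controller = new AbortController()
    let abort = () => controller.abort(signal.reason)
    signal.addEventListener('abort', abort)
    try {
        let promise1 = task1(controller.signal)
        let promise2 = task2(controller.signal)
        let array = await Promise.all([promise1, promise2])
        return {first: array[0], second: array[1]}
    } catch(e) {
        controller.abort(e)
        throw e
    } finally {
        signal.removeEventListener('abort', abort)
    }
}

// Hypothetical abortable task: finishes after `ms`, or stops early when aborted.
function sleepTask(name, ms, log) {
    return signal => new Promise((resolve, reject) => {
        let onAbort = () => {
            clearTimeout(timer)
            log.push(name + " aborted")
            reject(signal.reason)
        }
        let timer = setTimeout(() => {
            signal.removeEventListener('abort', onAbort)
            log.push(name + " finished")
            resolve(name)
        }, ms)
        signal.addEventListener('abort', onAbort, {once: true})
    })
}

// Hypothetical task that always fails after `ms` milliseconds.
function failingTask(ms) {
    return signal => new Promise((resolve, reject) => {
        setTimeout(() => reject(new Error("boom")), ms)
    })
}

async function demo() {
    let log = []
    let outer = new AbortController()
    try {
        await concurrently(null, failingTask(10), sleepTask("slow", 1000, log), outer.signal)
    } catch(e) {
        log.push("caught " + e.message)
    }
    return log
}
```

In this sketch the slow task is cancelled as soon as its sibling fails, so its timer is cleared instead of running for the full second.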

And we're done.